Deep learning for robotics in agile production

10 March 2023

Robot manipulators in agile production environments are tasked with repetitive actions that require high precision. Typical examples are grasping and assembly in the automotive industry. Most of these industrial robots are position-controlled, to ensure high accuracy and precision of end-effector motion. Tasks such as object pick-and-place suit this kind of control because the task is well defined and external disturbances are unlikely to occur. That is, consecutive objects are known, the motion path does not change, and contact with a rigid surface is either planned or absent.

Recent advances in robotics also allow humans and robots to collaborate safely on shared tasks. In this context, where robot and human share the same workspace, both must adapt so that they can work together as smoothly as possible while drawing on the best capabilities of each. Interfaces that ease robot programming and enable human-robot interaction are an inherent part of this, using techniques such as learning from demonstration, web-based interfaces, and visual perception. User experience design should then ensure efficient collaboration and acceptance by the human operator.

Within OpenDR, Tampere University has worked on the agile production use case, in which a human and a robot work in close collaboration on the shared task of Diesel engine assembly. To enable this, different tools from the OpenDR toolkit have been integrated and are being evaluated. These include (see figures):

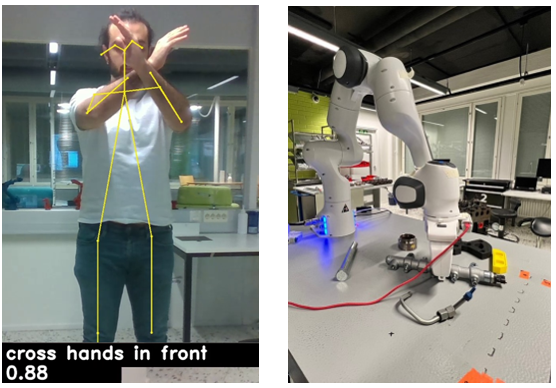

- human detection

- human action recognition

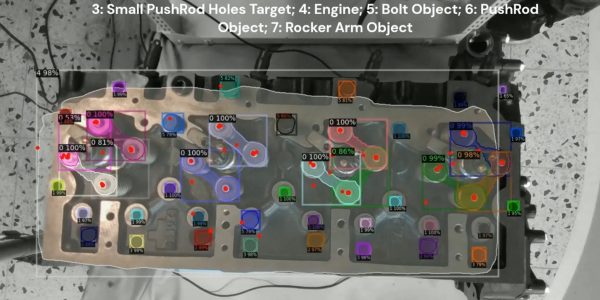

- object and target detection

- object grasping
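To make the integration concrete, the following is a minimal sketch of how the outputs of these four tools could be combined into a single decision per camera frame. It does not use the OpenDR API: the `Perception` record, the `next_robot_step` function, the action label `"request_part"`, and the decision policy itself are all hypothetical and chosen only for illustration.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Perception:
    """Outputs of the perception tools for one camera frame (hypothetical)."""
    human_detected: bool          # result of human detection
    human_action: Optional[str]   # label from human action recognition
    target_part: Optional[str]    # label from object and target detection


def next_robot_step(p: Perception, trigger_action: str = "request_part") -> str:
    """Map one perception result to a robot step (illustrative policy only)."""
    if not p.human_detected:
        return "idle"                 # nobody in the shared workspace
    if p.human_action != trigger_action:
        return "wait"                 # human is doing something else
    if p.target_part is None:
        return "search"               # part requested, but none in view
    return f"grasp:{p.target_part}"   # hand the part over to the grasping tool
```

In a real system each field would be filled by the corresponding toolkit module, and the returned step would be passed on to the robot controller. Keeping the decision logic separate from the perception modules is one possible design choice, as it lets each tool be evaluated on its own.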

All these tools and their datasets are available in the toolkit and the dedicated dataset repository:

Development of these tools requires expertise in many different topics. To ease the transfer of knowledge from the tool developers to industry, and to promote uptake, DIH TRINITY has uploaded the use case and the different modules to its Digital Access Point:

https://trinityrobotics.eu/use-cases/sensor-based-human-robot-collaboration/

Fig. 1 – Human detection (skeleton in yellow) and human action recognition.

Fig. 2 – Robot grasping a Diesel engine part.