Human-centric Perception in OpenDR

11 October 2021

The first OpenDR brief highlighted the role of Artificial Intelligence in changing many aspects of our everyday lives. It also cited Machine Learning, and specifically Deep Learning, as the main drivers of these new technologies. Recall that OpenDR’s ambition is to enable deep learning to be integrated efficiently and successfully into robotics applications, including the three use cases of healthcare, agri-food and agile production, via a modular, open and non-proprietary deep learning toolkit for core robotic functionalities that will provide advanced perception and cognition capabilities.

A major focus of OpenDR this year has been deep human-centric active perception and cognition. When we humans perceive a scene, we are active observers: we move around, change our viewpoint, get closer or farther away, turn the lights on if the scene is dark, and so on, so that we can understand the scene or find what we are looking for. Likewise, we want to equip robots with a similar ability to view their surroundings and act upon them. An illustration of active vision by humans is a parent trying to locate their child in a very large crowd spread over a large area, as in Fig. 1 below.

Fig. 1 Finding your child in the crowd.

Without moving around, checking each corner closely, and perhaps even shouting the child’s name, it would be almost impossible to find the child simply by looking at the picture!

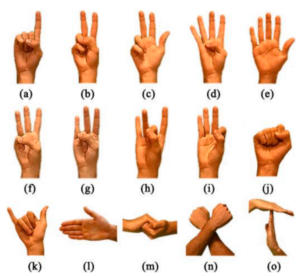

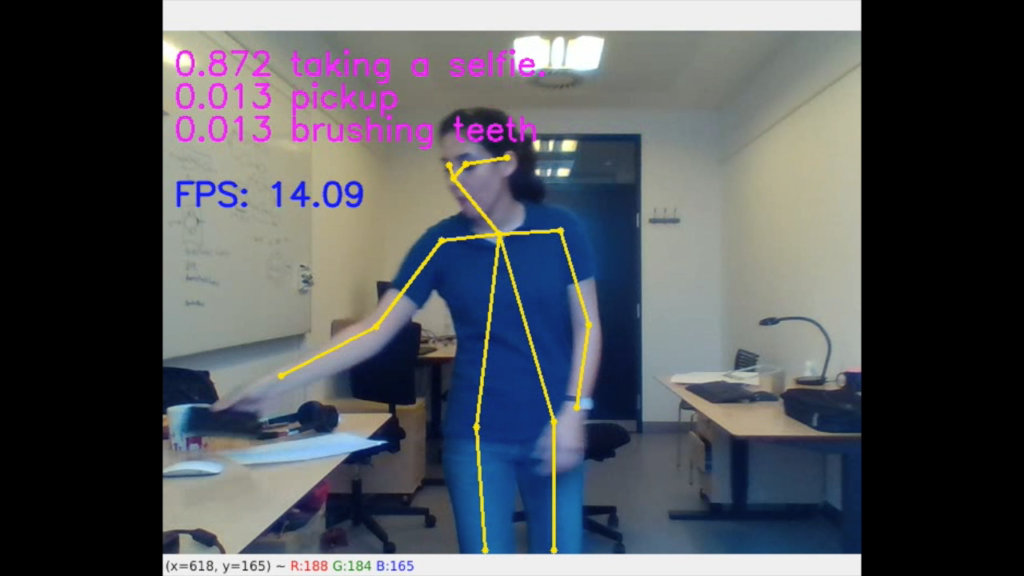

OpenDR aims to equip robots with the ability to recognize human actions, as well as human speech (commands), and then act upon them to perform a certain task. Fig. 2 and Fig. 3 below show examples of hand gesture recognition and human pose recognition.

Fig. 2 Hand gesture recognition

Fig. 3 Human pose recognition

OpenDR’s face recognition module can also detect and recognize persons appearing in a scene. The robot we used for our preliminary experiments, called JetBot, can reposition itself with respect to the person in the scene in order to increase the recognition confidence, providing an effective active perception approach. Face detection algorithms, such as RetinaFace, have been integrated in the toolkit, allowing faces to be detected even when face masks are worn. OpenDR will further investigate active perception for human-centric robot perception, enabling robots to perceive and interact with their surroundings much as humans do.
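The repositioning idea described above can be illustrated with a toy simulation. This is a minimal sketch and not OpenDR code: the confidence model, the function names `recognition_confidence` and `active_perception`, the set of moves, and the threshold are all assumptions made for illustration. On a real robot, the confidence would come from the face recognition model and each move would be a motor command.

```python
import math


def recognition_confidence(distance_m, angle_deg):
    """Hypothetical stand-in for a recognizer's confidence in [0, 1].

    Assumption: confidence peaks when the robot is 1 m from the person
    and views the face frontally (angle 0), and drops off otherwise.
    """
    distance_term = math.exp(-((distance_m - 1.0) ** 2) / 0.5)
    if abs(angle_deg) >= 90:
        return 0.0  # face not visible from behind or from the side
    angle_term = math.cos(math.radians(angle_deg)) ** 2
    return distance_term * angle_term


# Candidate moves as (change in distance in metres, change in angle in degrees).
MOVES = [(-0.25, 0.0), (0.25, 0.0), (0.0, -15.0), (0.0, 15.0)]


def active_perception(distance_m, angle_deg, threshold=0.9, max_steps=50):
    """Greedily reposition until the confidence reaches the threshold.

    Returns the list of visited (distance, angle, confidence) poses.
    """
    confidence = recognition_confidence(distance_m, angle_deg)
    path = [(distance_m, angle_deg, confidence)]
    for _ in range(max_steps):
        if confidence >= threshold:
            break  # confident enough, no need to move further
        # Evaluate every candidate move and keep the most promising one.
        candidates = [
            (recognition_confidence(distance_m + dd, angle_deg + da),
             distance_m + dd,
             angle_deg + da)
            for dd, da in MOVES
        ]
        best_conf, best_d, best_a = max(candidates)
        if best_conf <= confidence:
            break  # no move improves the view, stop here
        distance_m, angle_deg, confidence = best_d, best_a, best_conf
        path.append((distance_m, angle_deg, confidence))
    return path


if __name__ == "__main__":
    # Start far away and at an oblique angle, then print each pose visited.
    for d, a, c in active_perception(3.0, 60.0):
        print(f"distance={d:.2f} m  angle={a:.0f} deg  confidence={c:.3f}")
```

The greedy rule is only one possible design choice. It is enough to show the principle that the robot moves to improve its own observation, but a learned policy or a planner could replace it.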

Fig. 4 RetinaFace, as integrated in OpenDR, detects faces and whether a face mask is worn.

Authored by: Moncef Gabbouj, Jenni Raitoharju, Anton Muravev and Kateryna Chumachenko

Tampere University, Finland